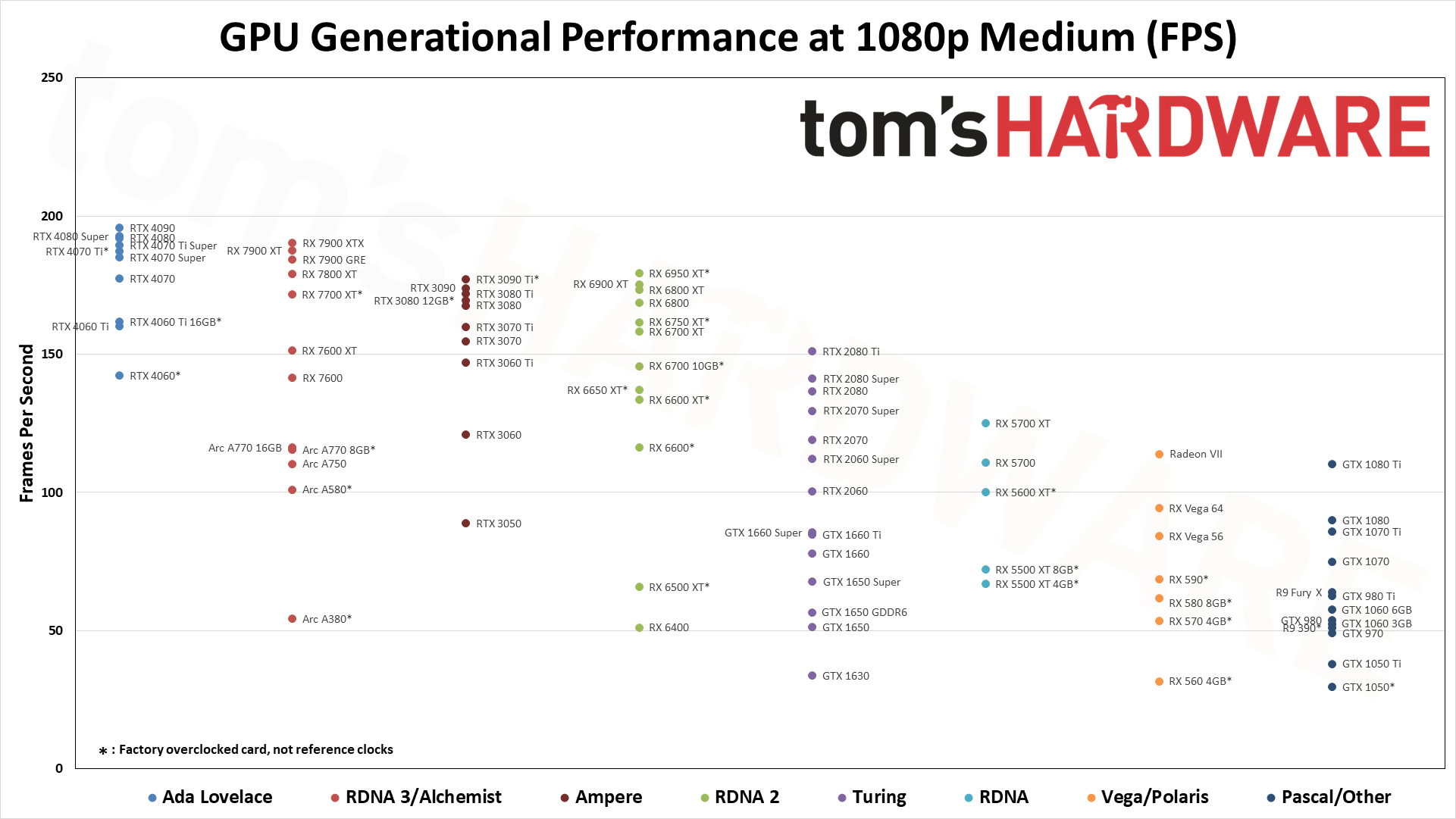

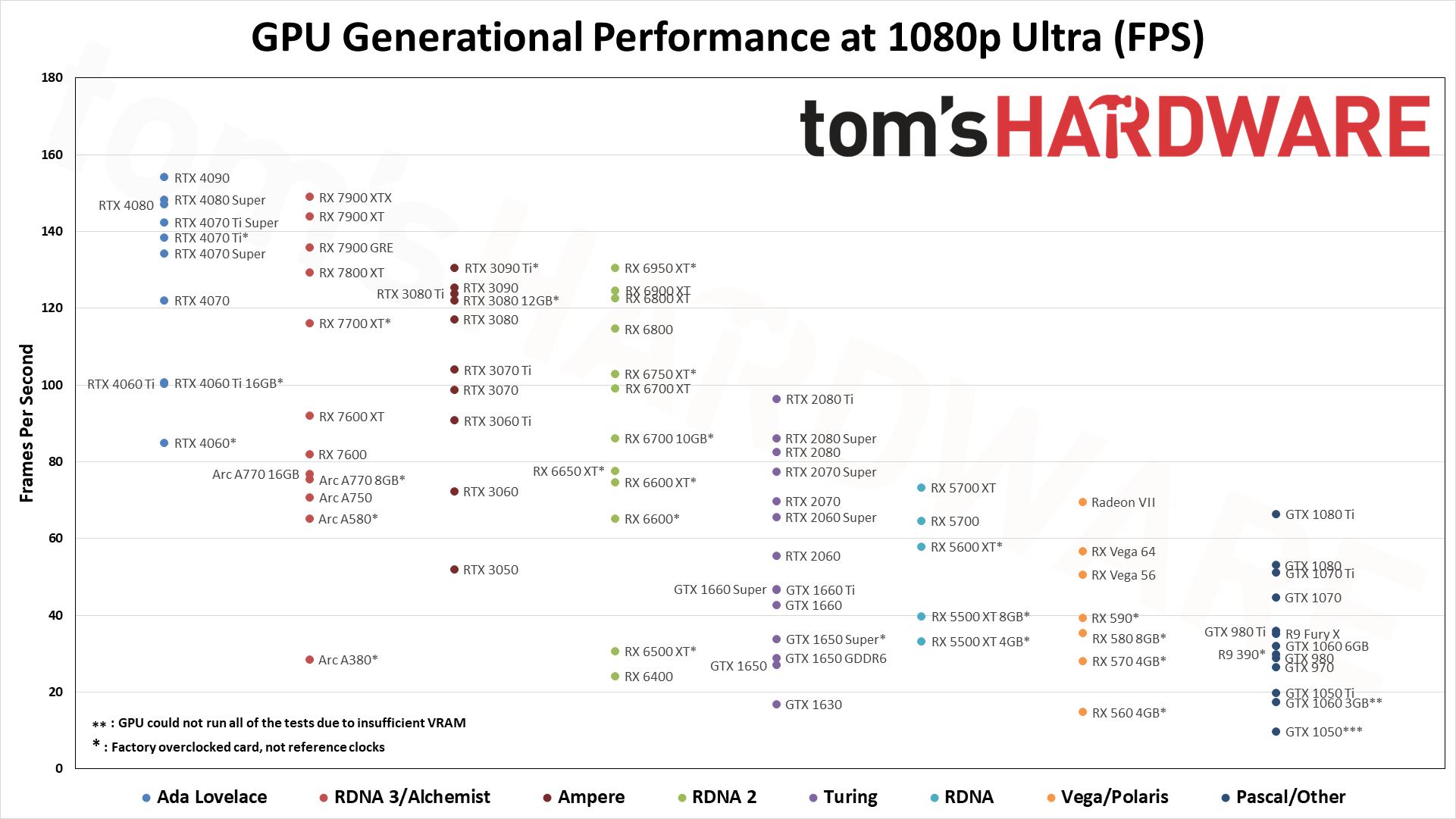

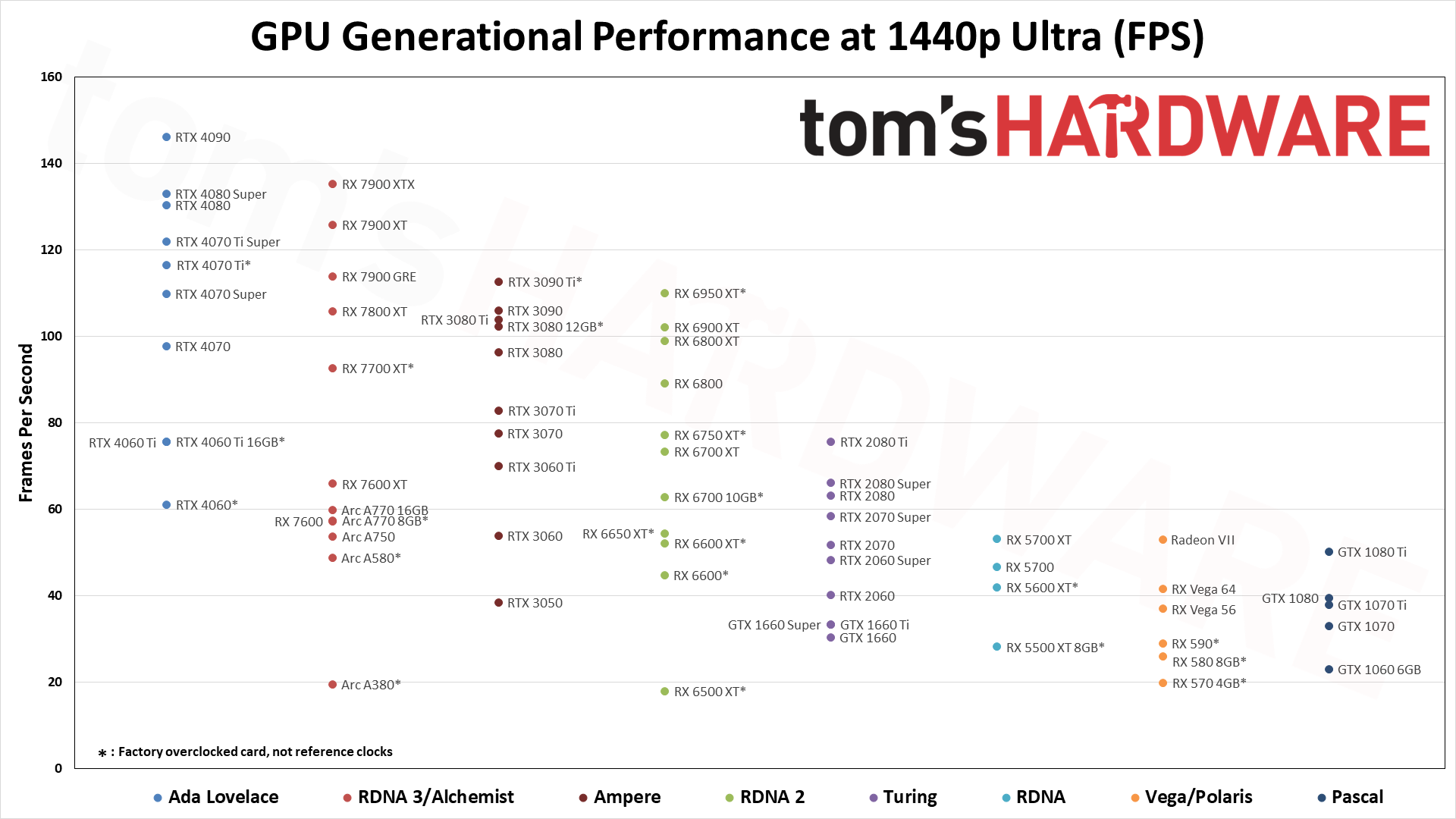

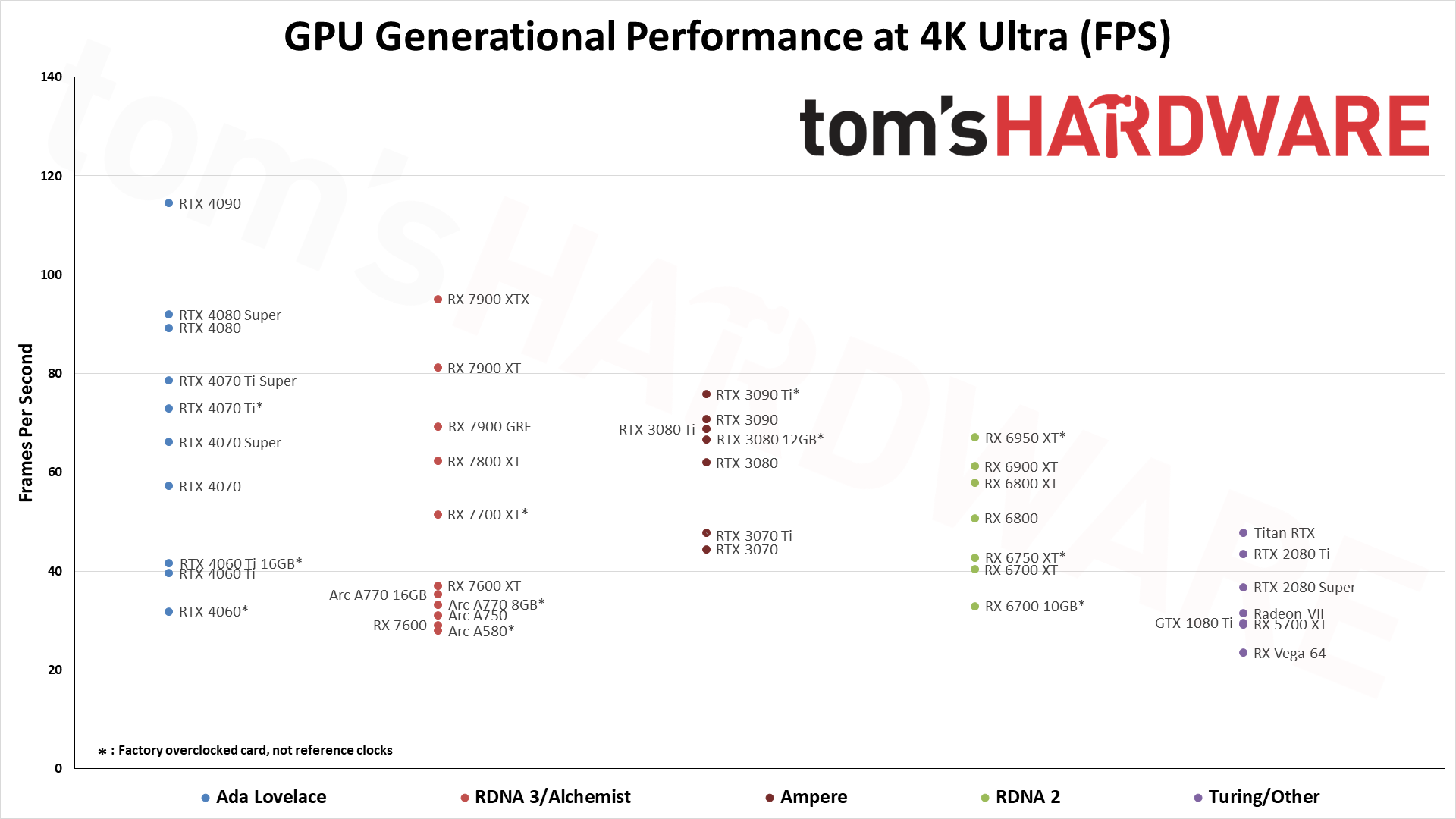

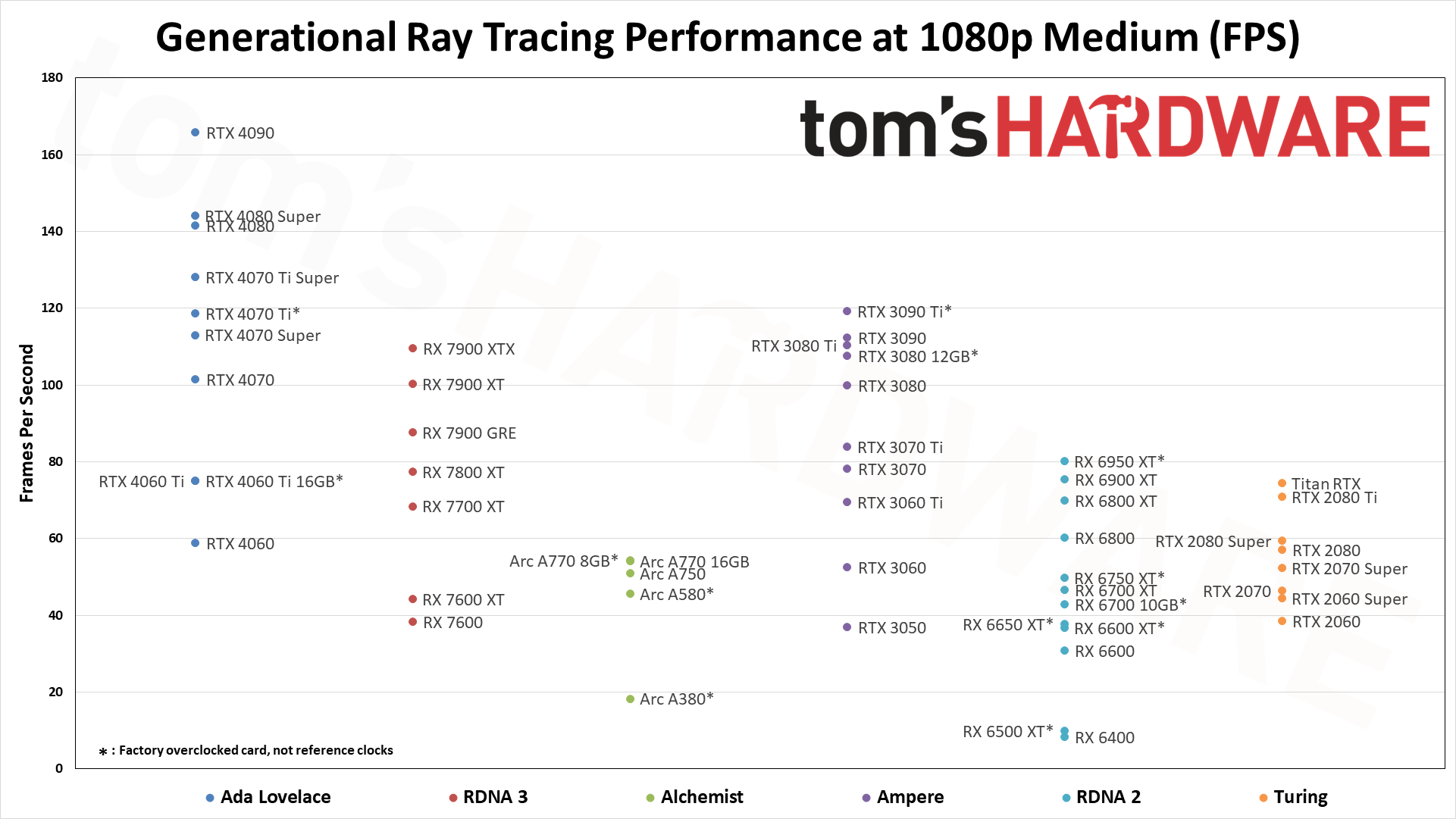

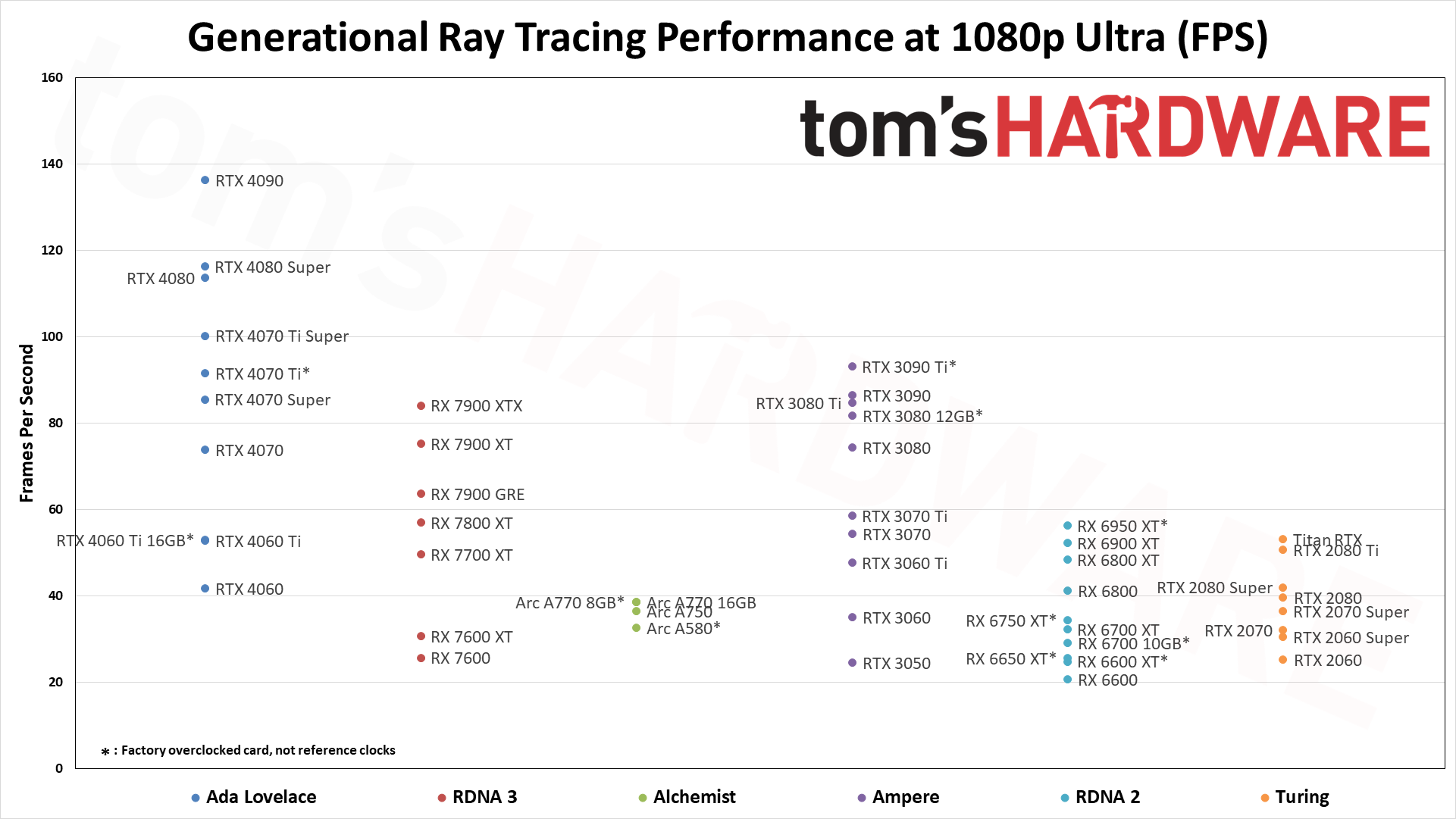

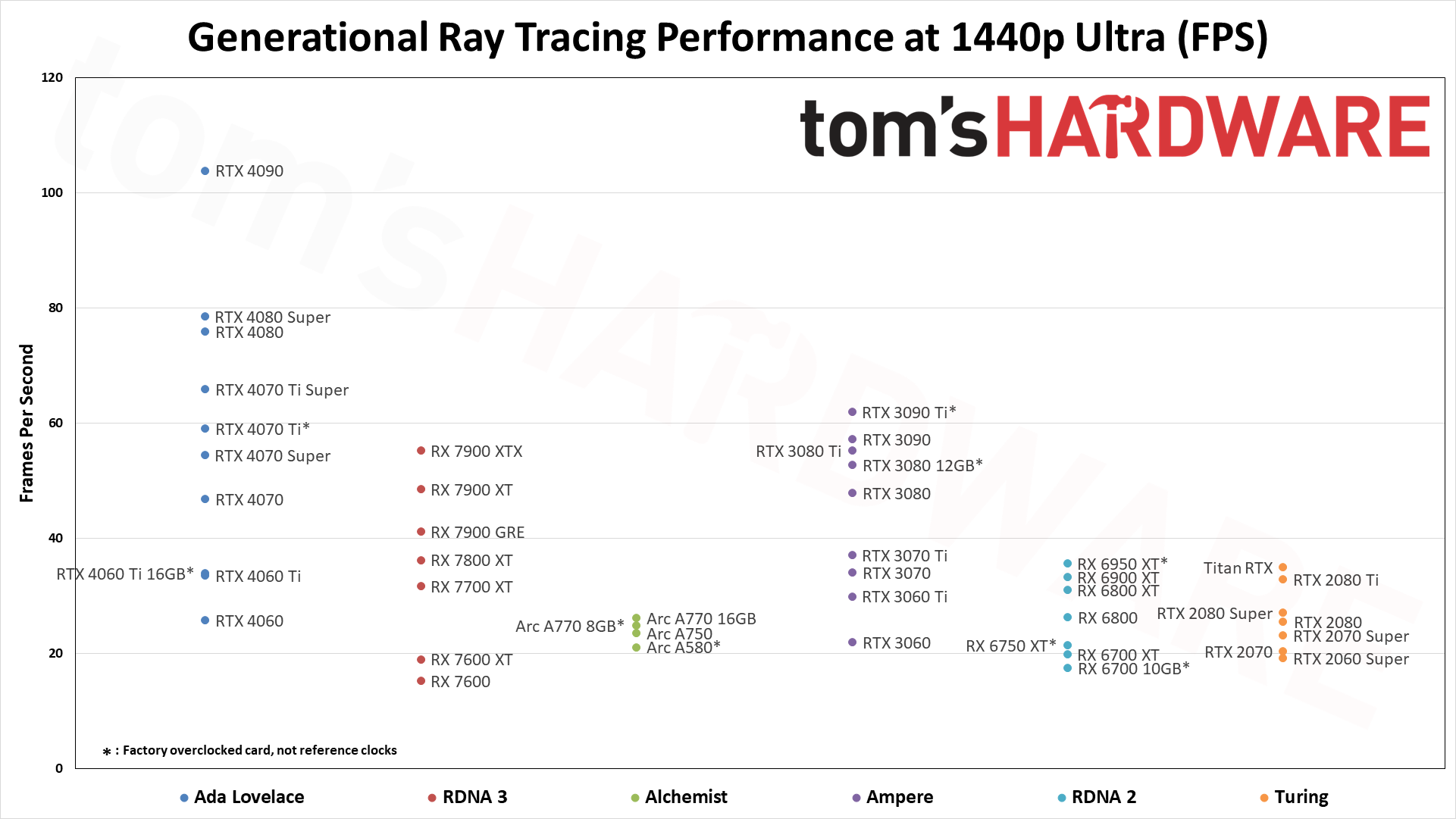

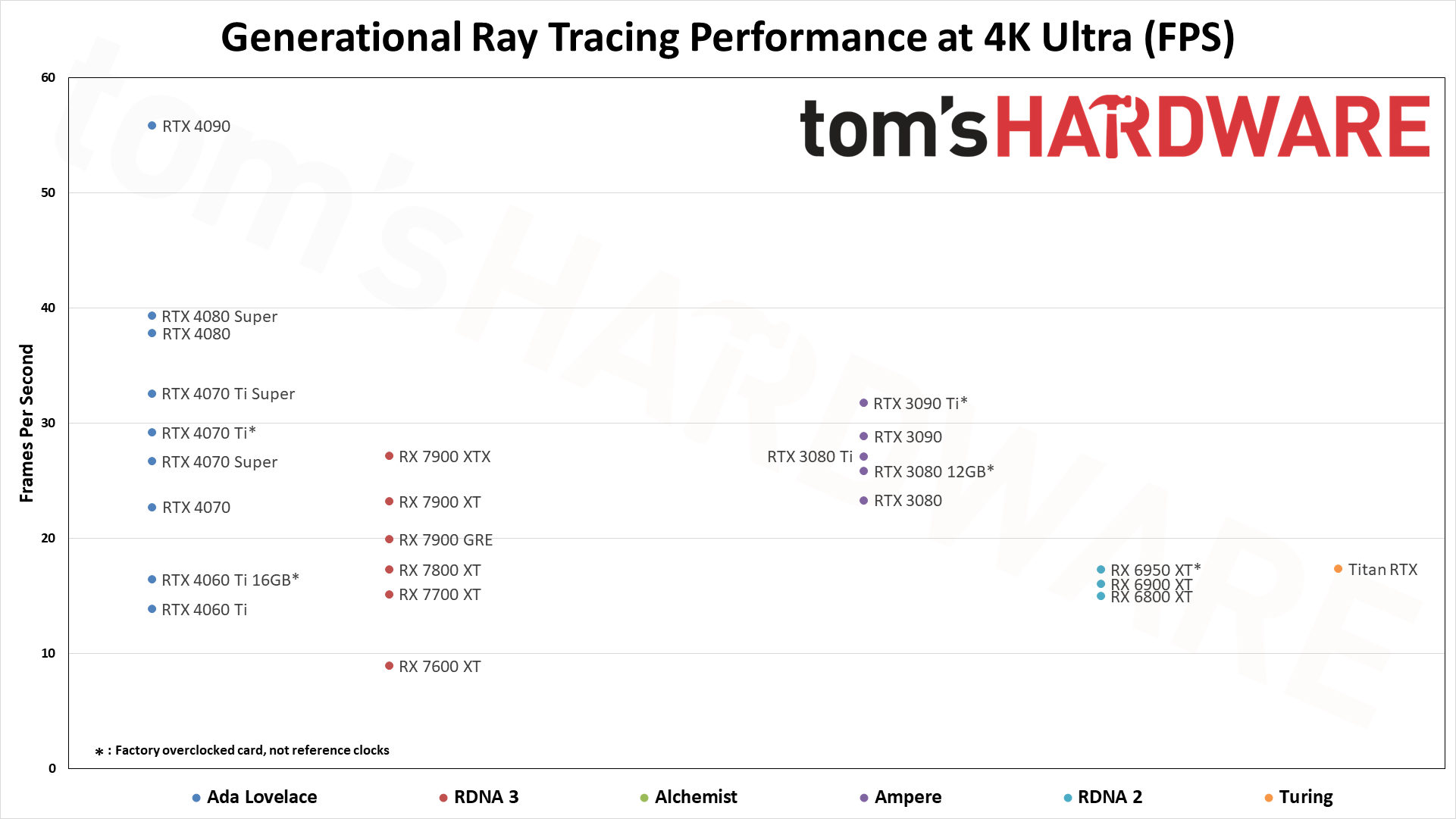

Each performance column shows the result as a percentage of the GeForce RTX 4090's result in that column, with the frame rate in parentheses. A blank cell means no result or price is listed for that card.

| Graphics Card | Lowest Price | 1080p Ultra | 1080p Medium | 1440p Ultra | 4K Ultra | Specifications |
|---|---|---|---|---|---|---|
| GeForce RTX 4090 | $1849 | 100.0% (154.1fps) | 100.0% (195.7fps) | 100.0% (146.1fps) | 100.0% (114.5fps) | AD102, 16384 shaders, 2520MHz, 24GB GDDR6X@21Gbps, 1008GB/s, 450W |
| Radeon RX 7900 XTX | $909 | 96.7% (149.0fps) | 97.2% (190.3fps) | 92.6% (135.3fps) | 83.1% (95.1fps) | Navi 31, 6144 shaders, 2500MHz, 24GB GDDR6@20Gbps, 960GB/s, 355W |
| GeForce RTX 4080 Super | $999 | 96.2% (148.3fps) | 98.5% (192.7fps) | 91.0% (133.0fps) | 80.3% (91.9fps) | AD103, 10240 shaders, 2550MHz, 16GB GDDR6X@23Gbps, 736GB/s, 320W |
| GeForce RTX 4080 | $1185 | 95.4% (147.0fps) | 98.1% (192.0fps) | 89.3% (130.4fps) | 78.0% (89.3fps) | AD103, 9728 shaders, 2505MHz, 16GB GDDR6X@22.4Gbps, 717GB/s, 320W |
| Radeon RX 7900 XT | $699 | 93.4% (143.9fps) | 95.8% (187.6fps) | 86.1% (125.9fps) | 71.0% (81.2fps) | Navi 31, 5376 shaders, 2400MHz, 20GB GDDR6@20Gbps, 800GB/s, 315W |
| GeForce RTX 4070 Ti Super | $799 | 92.3% (142.3fps) | 96.8% (189.4fps) | 83.5% (122.0fps) | 68.7% (78.6fps) | AD103, 8448 shaders, 2610MHz, 16GB GDDR6X@21Gbps, 672GB/s, 285W |
| GeForce RTX 4070 Ti | $699 | 89.8% (138.3fps) | 95.7% (187.2fps) | 79.8% (116.5fps) | 63.8% (73.0fps) | AD104, 7680 shaders, 2610MHz, 12GB GDDR6X@21Gbps, 504GB/s, 285W |
| Radeon RX 7900 GRE | $549 | 88.1% (135.8fps) | 94.1% (184.3fps) | 78.0% (113.9fps) | 60.5% (69.3fps) | Navi 31, 5120 shaders, 2245MHz, 16GB GDDR6@18Gbps, 576GB/s, 260W |
| GeForce RTX 4070 Super | $589 | 87.1% (134.2fps) | 94.6% (185.1fps) | 75.2% (109.8fps) | 57.8% (66.1fps) | AD104, 7168 shaders, 2475MHz, 12GB GDDR6X@21Gbps, 504GB/s, 220W |
| Radeon RX 6950 XT | $579 | 84.7% (130.5fps) | 91.7% (179.4fps) | 75.3% (110.1fps) | 58.6% (67.1fps) | Navi 21, 5120 shaders, 2310MHz, 16GB GDDR6@18Gbps, 576GB/s, 335W |
| GeForce RTX 3090 Ti | $1739 | 84.7% (130.5fps) | 90.5% (177.1fps) | 77.1% (112.7fps) | 66.3% (75.9fps) | GA102, 10752 shaders, 1860MHz, 24GB GDDR6X@21Gbps, 1008GB/s, 450W |
| Radeon RX 7800 XT | $509 | 83.9% (129.3fps) | 91.5% (179.1fps) | 72.4% (105.8fps) | 54.4% (62.3fps) | Navi 32, 3840 shaders, 2430MHz, 16GB GDDR6@19.5Gbps, 624GB/s, 263W |
| GeForce RTX 3090 | $1279 | 81.4% (125.5fps) | 88.9% (174.0fps) | 72.5% (106.0fps) | 61.8% (70.7fps) | GA102, 10496 shaders, 1695MHz, 24GB GDDR6X@19.5Gbps, 936GB/s, 350W |
| Radeon RX 6900 XT | $779 | 80.9% (124.6fps) | 89.6% (175.3fps) | 69.9% (102.1fps) | 53.5% (61.2fps) | Navi 21, 5120 shaders, 2250MHz, 16GB GDDR6@16Gbps, 512GB/s, 300W |
| GeForce RTX 3080 Ti | $1089 | 80.4% (123.9fps) | 87.8% (171.8fps) | 71.1% (103.9fps) | 60.1% (68.8fps) | GA102, 10240 shaders, 1665MHz, 12GB GDDR6X@19Gbps, 912GB/s, 350W |
| Radeon RX 6800 XT | $459 | 79.6% (122.7fps) | 88.5% (173.2fps) | 67.8% (99.0fps) | 50.6% (57.9fps) | Navi 21, 4608 shaders, 2250MHz, 16GB GDDR6@16Gbps, 512GB/s, 300W |
| GeForce RTX 3080 12GB | $999 | 79.2% (122.1fps) | 86.5% (169.4fps) | 70.0% (102.3fps) | 58.3% (66.7fps) | GA102, 8960 shaders, 1845MHz, 12GB GDDR6X@19Gbps, 912GB/s, 400W |
| GeForce RTX 4070 | $539 | 79.2% (122.0fps) | 90.7% (177.5fps) | 66.9% (97.8fps) | 50.0% (57.2fps) | AD104, 5888 shaders, 2475MHz, 12GB GDDR6X@21Gbps, 504GB/s, 200W |
| GeForce RTX 3080 | $879 | 76.0% (117.0fps) | 85.6% (167.6fps) | 66.0% (96.4fps) | 54.1% (62.0fps) | GA102, 8704 shaders, 1710MHz, 10GB GDDR6X@19Gbps, 760GB/s, 320W |
| Radeon RX 7700 XT | $449 | 75.3% (116.1fps) | 87.7% (171.6fps) | 63.4% (92.7fps) | 45.0% (51.5fps) | Navi 32, 3456 shaders, 2544MHz, 12GB GDDR6@18Gbps, 432GB/s, 245W |
| Radeon RX 6800 | $399 | 74.4% (114.6fps) | 86.2% (168.7fps) | 61.0% (89.2fps) | 44.3% (50.7fps) | Navi 21, 3840 shaders, 2105MHz, 16GB GDDR6@16Gbps, 512GB/s, 250W |
| GeForce RTX 3070 Ti | $599 | 67.5% (104.0fps) | 81.6% (159.8fps) | 56.7% (82.8fps) | 41.7% (47.7fps) | GA104, 6144 shaders, 1770MHz, 8GB GDDR6X@19Gbps, 608GB/s, 290W |
| Radeon RX 6750 XT | $359 | 66.8% (102.9fps) | 82.6% (161.6fps) | 52.9% (77.2fps) | 37.4% (42.8fps) | Navi 22, 2560 shaders, 2600MHz, 12GB GDDR6@18Gbps, 432GB/s, 250W |
| GeForce RTX 4060 Ti 16GB | $429 | 65.3% (100.6fps) | 82.6% (161.7fps) | 51.8% (75.7fps) | 36.4% (41.6fps) | AD106, 4352 shaders, 2535MHz, 16GB GDDR6@18Gbps, 288GB/s, 160W |
| GeForce RTX 4060 Ti | $374 | 65.1% (100.4fps) | 81.8% (160.1fps) | 51.7% (75.6fps) | 34.6% (39.6fps) | AD106, 4352 shaders, 2535MHz, 8GB GDDR6@18Gbps, 288GB/s, 160W |
| Titan RTX | | 64.5% (99.3fps) | 80.0% (156.6fps) | 54.4% (79.5fps) | 41.8% (47.8fps) | TU102, 4608 shaders, 1770MHz, 24GB GDDR6@14Gbps, 672GB/s, 280W |
| Radeon RX 6700 XT | $339 | 64.3% (99.1fps) | 80.8% (158.1fps) | 50.3% (73.4fps) | 35.3% (40.4fps) | Navi 22, 2560 shaders, 2581MHz, 12GB GDDR6@16Gbps, 384GB/s, 230W |
| GeForce RTX 3070 | $399 | 64.1% (98.8fps) | 79.1% (154.8fps) | 53.2% (77.7fps) | 38.8% (44.4fps) | GA104, 5888 shaders, 1725MHz, 8GB GDDR6@14Gbps, 448GB/s, 220W |
| GeForce RTX 2080 Ti | | 62.5% (96.3fps) | 77.2% (151.0fps) | 51.8% (75.6fps) | 38.0% (43.5fps) | TU102, 4352 shaders, 1545MHz, 11GB GDDR6@14Gbps, 616GB/s, 250W |
| Radeon RX 7600 XT | $319 | 59.7% (91.9fps) | 77.3% (151.2fps) | 45.1% (65.9fps) | 32.4% (37.1fps) | Navi 33, 2048 shaders, 2755MHz, 16GB GDDR6@18Gbps, 288GB/s, 190W |
| GeForce RTX 3060 Ti | $449 | 58.9% (90.7fps) | 75.0% (146.9fps) | 47.9% (70.0fps) | | GA104, 4864 shaders, 1665MHz, 8GB GDDR6@14Gbps, 448GB/s, 200W |
| Radeon RX 6700 10GB | $269 | 55.9% (86.1fps) | 74.4% (145.7fps) | 43.0% (62.8fps) | 28.7% (32.9fps) | Navi 22, 2304 shaders, 2450MHz, 10GB GDDR6@16Gbps, 320GB/s, 175W |
| GeForce RTX 2080 Super | | 55.8% (86.0fps) | 72.2% (141.3fps) | 45.2% (66.1fps) | 32.1% (36.7fps) | TU104, 3072 shaders, 1815MHz, 8GB GDDR6@15.5Gbps, 496GB/s, 250W |
| GeForce RTX 4060 | $294 | 55.1% (84.9fps) | 72.7% (142.3fps) | 41.9% (61.2fps) | 27.8% (31.9fps) | AD107, 3072 shaders, 2460MHz, 8GB GDDR6@17Gbps, 272GB/s, 115W |
| GeForce RTX 2080 | | 53.5% (82.5fps) | 69.8% (136.7fps) | 43.2% (63.2fps) | | TU104, 2944 shaders, 1710MHz, 8GB GDDR6@14Gbps, 448GB/s, 215W |
| Radeon RX 7600 | $269 | 53.2% (82.0fps) | 72.3% (141.4fps) | 39.2% (57.3fps) | 25.4% (29.1fps) | Navi 33, 2048 shaders, 2655MHz, 8GB GDDR6@18Gbps, 288GB/s, 165W |
| Radeon RX 6650 XT | $229 | 50.4% (77.7fps) | 70.0% (137.1fps) | 37.3% (54.5fps) | | Navi 23, 2048 shaders, 2635MHz, 8GB GDDR6@18Gbps, 280GB/s, 180W |
| GeForce RTX 2070 Super | | 50.3% (77.4fps) | 66.2% (129.6fps) | 40.0% (58.4fps) | | TU104, 2560 shaders, 1770MHz, 8GB GDDR6@14Gbps, 448GB/s, 215W |
| Intel Arc A770 16GB | $289 | 49.9% (76.9fps) | 59.4% (116.4fps) | 41.0% (59.8fps) | 30.8% (35.3fps) | ACM-G10, 4096 shaders, 2400MHz, 16GB GDDR6@17.5Gbps, 560GB/s, 225W |
| Intel Arc A770 8GB | $343 | 48.9% (75.3fps) | 59.0% (115.5fps) | 39.3% (57.5fps) | 29.0% (33.2fps) | ACM-G10, 4096 shaders, 2400MHz, 8GB GDDR6@16Gbps, 512GB/s, 225W |
| Radeon RX 6600 XT | $399 | 48.5% (74.7fps) | 68.2% (133.5fps) | 35.7% (52.2fps) | | Navi 23, 2048 shaders, 2589MHz, 8GB GDDR6@16Gbps, 256GB/s, 160W |
| Radeon RX 5700 XT | | 47.6% (73.3fps) | 63.8% (124.9fps) | 36.3% (53.1fps) | 25.6% (29.3fps) | Navi 10, 2560 shaders, 1905MHz, 8GB GDDR6@14Gbps, 448GB/s, 225W |
| GeForce RTX 3060 | $299 | 46.9% (72.3fps) | 61.8% (121.0fps) | 36.9% (54.0fps) | | GA106, 3584 shaders, 1777MHz, 12GB GDDR6@15Gbps, 360GB/s, 170W |
| Intel Arc A750 | $239 | 45.9% (70.8fps) | 56.4% (110.4fps) | 36.7% (53.7fps) | 27.2% (31.1fps) | ACM-G10, 3584 shaders, 2350MHz, 8GB GDDR6@16Gbps, 512GB/s, 225W |
| GeForce RTX 2070 | | 45.3% (69.8fps) | 60.8% (119.1fps) | 35.5% (51.8fps) | | TU106, 2304 shaders, 1620MHz, 8GB GDDR6@14Gbps, 448GB/s, 175W |
| Radeon VII | | 45.1% (69.5fps) | 58.2% (113.9fps) | 36.3% (53.0fps) | 27.5% (31.5fps) | Vega 20, 3840 shaders, 1750MHz, 16GB HBM2@2.0Gbps, 1024GB/s, 300W |
| GeForce GTX 1080 Ti | | 43.1% (66.4fps) | 56.3% (110.2fps) | 34.4% (50.2fps) | 25.8% (29.5fps) | GP102, 3584 shaders, 1582MHz, 11GB GDDR5X@11Gbps, 484GB/s, 250W |
| GeForce RTX 2060 Super | | 42.5% (65.5fps) | 57.2% (112.0fps) | 33.1% (48.3fps) | | TU106, 2176 shaders, 1650MHz, 8GB GDDR6@14Gbps, 448GB/s, 175W |
| Radeon RX 6600 | $199 | 42.3% (65.2fps) | 59.3% (116.2fps) | 30.6% (44.8fps) | | Navi 23, 1792 shaders, 2491MHz, 8GB GDDR6@14Gbps, 224GB/s, 132W |
| Intel Arc A580 | $169 | 42.3% (65.1fps) | 51.6% (101.1fps) | 33.4% (48.8fps) | 24.4% (27.9fps) | ACM-G10, 3072 shaders, 2300MHz, 8GB GDDR6@16Gbps, 512GB/s, 185W |
| Radeon RX 5700 | | 41.9% (64.5fps) | 56.6% (110.8fps) | 31.9% (46.7fps) | | Navi 10, 2304 shaders, 1725MHz, 8GB GDDR6@14Gbps, 448GB/s, 180W |
| Radeon RX 5600 XT | | 37.5% (57.8fps) | 51.1% (100.0fps) | 28.8% (42.0fps) | | Navi 10, 2304 shaders, 1750MHz, 6GB GDDR6@14Gbps, 336GB/s, 160W |
| Radeon RX Vega 64 | | 36.8% (56.7fps) | 48.2% (94.3fps) | 28.5% (41.6fps) | 20.5% (23.5fps) | Vega 10, 4096 shaders, 1546MHz, 8GB HBM2@1.89Gbps, 484GB/s, 295W |
| GeForce RTX 2060 | | 36.0% (55.5fps) | 51.4% (100.5fps) | 27.5% (40.1fps) | | TU106, 1920 shaders, 1680MHz, 6GB GDDR6@14Gbps, 336GB/s, 160W |
| GeForce GTX 1080 | | 34.4% (53.0fps) | 45.9% (89.9fps) | 27.0% (39.4fps) | | GP104, 2560 shaders, 1733MHz, 8GB GDDR5X@10Gbps, 320GB/s, 180W |
| GeForce RTX 3050 | $224 | 33.7% (51.9fps) | 45.4% (88.8fps) | 26.4% (38.5fps) | | GA106, 2560 shaders, 1777MHz, 8GB GDDR6@14Gbps, 224GB/s, 130W |
| GeForce GTX 1070 Ti | | 33.1% (51.1fps) | 43.8% (85.7fps) | 26.0% (37.9fps) | | GP104, 2432 shaders, 1683MHz, 8GB GDDR5@8Gbps, 256GB/s, 180W |
| Radeon RX Vega 56 | | 32.8% (50.6fps) | 43.0% (84.2fps) | 25.3% (37.0fps) | | Vega 10, 3584 shaders, 1471MHz, 8GB HBM2@1.6Gbps, 410GB/s, 210W |
| GeForce GTX 1660 Super | | 30.3% (46.8fps) | 43.7% (85.5fps) | 22.8% (33.3fps) | | TU116, 1408 shaders, 1785MHz, 6GB GDDR6@14Gbps, 336GB/s, 125W |
| GeForce GTX 1660 Ti | | 30.3% (46.6fps) | 43.3% (84.8fps) | 22.8% (33.3fps) | | TU116, 1536 shaders, 1770MHz, 6GB GDDR6@12Gbps, 288GB/s, 120W |
| GeForce GTX 1070 | | 29.0% (44.7fps) | 38.3% (75.0fps) | 22.7% (33.1fps) | | GP104, 1920 shaders, 1683MHz, 8GB GDDR5@8Gbps, 256GB/s, 150W |
| GeForce GTX 1660 | | 27.7% (42.6fps) | 39.7% (77.8fps) | 20.8% (30.3fps) | | TU116, 1408 shaders, 1785MHz, 6GB GDDR5@8Gbps, 192GB/s, 120W |
| Radeon RX 5500 XT 8GB | | 25.7% (39.7fps) | 36.8% (72.1fps) | 19.3% (28.2fps) | | Navi 14, 1408 shaders, 1845MHz, 8GB GDDR6@14Gbps, 224GB/s, 130W |
| Radeon RX 590 | | 25.5% (39.3fps) | 35.0% (68.5fps) | 19.9% (29.0fps) | | Polaris 30, 2304 shaders, 1545MHz, 8GB GDDR5@8Gbps, 256GB/s, 225W |
| GeForce GTX 980 Ti | | 23.3% (35.9fps) | 32.0% (62.6fps) | 18.2% (26.6fps) | | GM200, 2816 shaders, 1075MHz, 6GB GDDR5@7Gbps, 336GB/s, 250W |
| Radeon RX 580 8GB | | 22.9% (35.3fps) | 31.5% (61.7fps) | 17.8% (26.0fps) | | Polaris 20, 2304 shaders, 1340MHz, 8GB GDDR5@8Gbps, 256GB/s, 185W |
| Radeon R9 Fury X | | 22.9% (35.2fps) | 32.6% (63.8fps) | | | Fiji, 4096 shaders, 1050MHz, 4GB HBM@1Gbps, 512GB/s, 275W |
| GeForce GTX 1650 Super | | 22.0% (33.9fps) | 34.6% (67.7fps) | 14.5% (21.2fps) | | TU116, 1280 shaders, 1725MHz, 4GB GDDR6@12Gbps, 192GB/s, 100W |
| Radeon RX 5500 XT 4GB | | 21.6% (33.3fps) | 34.1% (66.8fps) | | | Navi 14, 1408 shaders, 1845MHz, 4GB GDDR6@14Gbps, 224GB/s, 130W |
| GeForce GTX 1060 6GB | | 20.8% (32.1fps) | 29.5% (57.7fps) | 15.8% (23.0fps) | | GP106, 1280 shaders, 1708MHz, 6GB GDDR5@8Gbps, 192GB/s, 120W |
| Radeon RX 6500 XT | $159 | 19.9% (30.6fps) | 33.6% (65.8fps) | 12.3% (18.0fps) | | Navi 24, 1024 shaders, 2815MHz, 4GB GDDR6@18Gbps, 144GB/s, 107W |
| Radeon R9 390 | | 19.3% (29.8fps) | 26.1% (51.1fps) | | | Grenada, 2560 shaders, 1000MHz, 8GB GDDR5@6Gbps, 384GB/s, 275W |
| GeForce GTX 980 | | 18.7% (28.9fps) | 27.4% (53.6fps) | | | GM204, 2048 shaders, 1216MHz, 4GB GDDR5@7Gbps, 256GB/s, 165W |
| GeForce GTX 1650 GDDR6 | | 18.7% (28.8fps) | 28.9% (56.6fps) | | | TU117, 896 shaders, 1590MHz, 4GB GDDR6@12Gbps, 192GB/s, 75W |
| Intel Arc A380 | $119 | 18.4% (28.4fps) | 27.7% (54.3fps) | 13.3% (19.5fps) | | ACM-G11, 1024 shaders, 2450MHz, 6GB GDDR6@15.5Gbps, 186GB/s, 75W |
| Radeon RX 570 4GB | | 18.2% (28.1fps) | 27.4% (53.6fps) | 13.6% (19.9fps) | | Polaris 20, 2048 shaders, 1244MHz, 4GB GDDR5@7Gbps, 224GB/s, 150W |
| GeForce GTX 1650 | | 17.5% (27.0fps) | 26.2% (51.3fps) | | | TU117, 896 shaders, 1665MHz, 4GB GDDR5@8Gbps, 128GB/s, 75W |
| GeForce GTX 970 | | 17.2% (26.5fps) | 25.0% (49.0fps) | | | GM204, 1664 shaders, 1178MHz, 4GB GDDR5@7Gbps, 256GB/s, 145W |
| Radeon RX 6400 | $124 | 15.7% (24.1fps) | 26.1% (51.1fps) | | | Navi 24, 768 shaders, 2321MHz, 4GB GDDR6@16Gbps, 128GB/s, 53W |
| GeForce GTX 1050 Ti | | 12.9% (19.8fps) | 19.4% (38.0fps) | | | GP107, 768 shaders, 1392MHz, 4GB GDDR5@7Gbps, 112GB/s, 75W |
| GeForce GTX 1060 3GB | | | 26.8% (52.5fps) | | | GP106, 1152 shaders, 1708MHz, 3GB GDDR5@8Gbps, 192GB/s, 120W |
| GeForce GTX 1630 | | 10.9% (16.9fps) | 17.3% (33.8fps) | | | TU117, 512 shaders, 1785MHz, 4GB GDDR6@12Gbps, 96GB/s, 75W |
| Radeon RX 560 4GB | | 9.6% (14.7fps) | 16.2% (31.7fps) | | | Baffin, 1024 shaders, 1275MHz, 4GB GDDR5@7Gbps, 112GB/s, 60-80W |
| GeForce GTX 1050 | | | 15.2% (29.7fps) | | | GP107, 640 shaders, 1455MHz, 2GB GDDR5@7Gbps, 112GB/s, 75W |
| Radeon RX 550 4GB | | | 10.0% (19.5fps) | | | Lexa, 640 shaders, 1183MHz, 4GB GDDR5@7Gbps, 112GB/s, 50W |
| GeForce GT 1030 | | | 7.5% (14.6fps) | | | GP108, 384 shaders, 1468MHz, 2GB GDDR5@6Gbps, 48GB/s, 30W |
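Two pieces of arithmetic sit behind the table's columns: each percentage is the card's frame rate divided by the GeForce RTX 4090's frame rate in the same column, and each bandwidth figure is the per-pin memory data rate multiplied by the memory bus width and divided by 8 bits per byte. The Python sketch below reproduces both under stated assumptions. The bus widths used (384-bit for the RTX 4090 and RX 7900 XTX, 128-bit for the RTX 4060) are not listed in the table and are supplied here, and the helper names are hypothetical. Recomputed percentages can differ from the table by 0.1 points, presumably because the table was calculated from unrounded frame rates.

```python
# Minimal sketch of the table's arithmetic. Frame rates are copied from the
# table; bus widths are NOT in the table and are assumptions for illustration.

# GeForce RTX 4090 results: the 100% reference in every performance column.
BASELINE_FPS = {
    "1080p Ultra": 154.1,
    "1080p Medium": 195.7,
    "1440p Ultra": 146.1,
    "4K Ultra": 114.5,
}


def relative_performance(fps: float, setting: str) -> float:
    """Return fps as a percentage of the RTX 4090 result, rounded to 0.1."""
    return round(100.0 * fps / BASELINE_FPS[setting], 1)


def bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin rate (Gbps) x bus width / 8."""
    return data_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    # Radeon RX 7900 XTX at 4K Ultra: 95.1fps in the table.
    print(relative_performance(95.1, "4K Ultra"))  # 83.1
    # RTX 4090: GDDR6X@21Gbps, assuming a 384-bit bus.
    print(bandwidth_gbs(21, 384))  # 1008.0
    # RTX 4060: GDDR6@17Gbps, assuming a 128-bit bus.
    print(bandwidth_gbs(17, 128))  # 272.0
```

Both recomputed bandwidths match the Specifications column (1008GB/s and 272GB/s), which is a quick way to sanity-check a spec line when the bus width is known.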

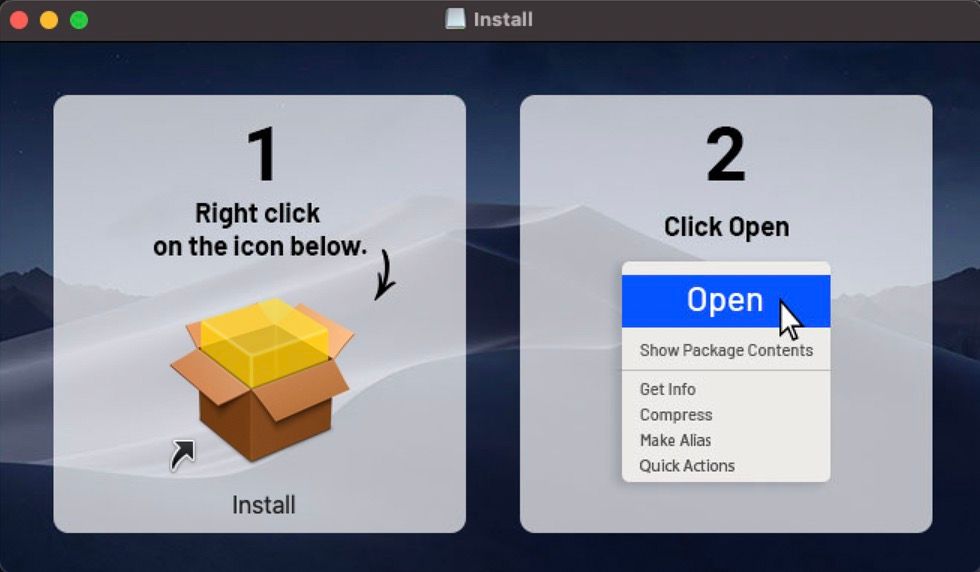

I wouldn’t put it past my 79-year-old grandmother to be able to do this. Image of Shlayer malware from Jamf.

I wouldn’t put it past my 79-year-old grandmother to be able to do this. Image of Shlayer malware from Jamf.